MINUTE BY MINUTE

STATE NEWS

Three weeks on with the state high school conflict unresolved, teachers march along the boulevard

A group of 150 people, including teachers, students, and parents, marched in support of the state high schools along...

POLITICS

SECURITY

Alleged Iztacalco serial femicide suspect linked to 7 victims, prosecutor's office confirms

The Fiscalía de la Ciudad de México confirmed that, as of April 25, the alleged Iztacalco serial femicide suspect, Miguel N, is linked to 6 fatal victims and one...

STORY OF THE DAY

Five women and two men kidnapped in Jerez are rescued

Jerez, Zac., April 24, 2024.- Officers of the Policía Estatal Preventiva freed five women and two men who had been kidnapped hours earlier in this municipality, following a police deployment and...

EDUCATION

Three weeks on with the state high school conflict unresolved, teachers march along the boulevard

A group of 150 people, including teachers, students, and parents, marched in support of the state high schools along Adolfo López Mateos boulevard, setting out from the Secretaría de Educación and continuing to the...

TINTA INDELEBLE

Código político: Fresnillo, kidnapping capital

By Juan Gómez. With a population of approximately 240,000 and located in the north-central part of the country, Fresnillo,...

There is no democracy where there is fear

With 46 days to go before the largest election in Mexico's history takes place, in the...

ENTRESEMANA/ The president's poor ex-minister

By MOISÉS SÁNCHEZ LIMÓN. Or, what amounts to the same thing: “they want to throw me off the boat at all costs,” according to...

MEXICO AND THE WORLD

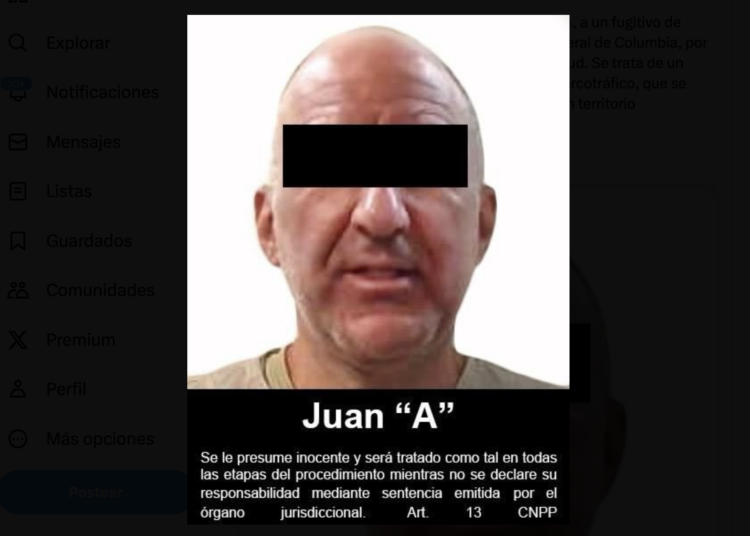

Juan Manuel Abouzaid Bayeh, alias “El Escorpión,” of the CJNG is extradited

The Fiscalía General de la República handed over Juan Manuel Abouzaid Bayeh in extradition to the United States, a member of high...

Most popular this week

- Ecuador files a complaint with the prosecutor's office against Roberto Canseco, a Mexican diplomat, for trying to prevent the raid on the embassy

- IEEZ will organize 31 debates; 8 requests were rejected

- Alleged Iztacalco serial femicide suspect linked to 7 victims, prosecutor's office confirms

- Hooded men send a message to AMLO and Sheinbaum: “This is not staged”

- Zacatecas government unable to resolve the state high school conflict; teachers occupy Sefin

Copyright © 2021 Pórtico Mx

Proudly designed and developed by Omar Reyes